New cluster computer at the Albert Einstein Institute makes black holes calculable

A centre of excellence for scientific computing is being established in Potsdam-Golm

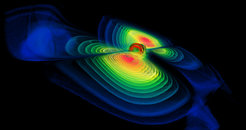

Almost 100 years after Albert Einstein developed the theory of relativity, our knowledge of gravitational physics has grown rapidly. Einstein, for example, had strong reservations about the existence of black holes. Today we are certain that they exist. This certainty is due, among other things, to advances in computing. Ever faster computers enable scientists to test the complex concepts and theories of gravitational physics by simulating realistic astrophysical systems and visualizing them in three dimensions.

But fast is sometimes not fast enough. The Max Planck Institute for Gravitational Physics (Albert Einstein Institute, AEI) in Golm, an international centre of relativity theory, is home to some of the leading minds in gravitational physics. Research there has reached a scale that exceeds the capacity of the Institute's high-performance SGI CRAY Origin 2000 computer. As a transitional measure, computation runs have therefore been carried out at international high-performance computing centres, such as the NCSA in the USA. However, since this involves considerable effort and expense, AEI has now procured a high-performance Linux computer cluster with approximately 20 times the power of the CRAY.

“We are happy to now be able to test our theories and models, e.g. concerning astrophysical events, such as the merging of two black holes, directly at the Institute and thus to work faster and more effectively,” stated Prof. Bernard Schutz, Managing Director of the AEI. “We are also thereby making a decisive contribution to the progress of international large-scale projects such as GEO600 (earth-bound gravitational wave detector) or LISA (gravitational wave measurement in space).”

PEYOTE

The new computer cluster at AEI was given the name “PEYOTE” by Prof. Seidel’s Numerical Relativity Theory Group, short for “Parallel Execute Your Own Theoretical Equation.” Peyote is also the name of a cactus species native mainly to Mexico. The name is apt because the program primarily used on the cluster is known worldwide as CACTUS.

The main users are the scientists at the Department of Astrophysical Relativity and, in particular, the Numerical Relativity Group under the direction of Prof. Seidel. However, scientists from other groups and cooperating partners are also users of this high-performance cluster.

The cluster is particularly suitable for problems that can be parallelized. These include matrix operations, which are the main ingredient of simulation calculations. For such problems, the individual nodes of the cluster must be able to communicate with one another quickly and efficiently. The main research area of the Numerical Relativity Group is astrophysically interesting cases such as the collision of black holes or neutron stars. The results of these simulation calculations are visualised either on the head node of the cluster or on workstations that are particularly suitable for graphics output.
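To illustrate what "parallelizable" means for a matrix operation, here is a minimal Python sketch that splits a matrix-vector product into row blocks. It is not the method used by CACTUS or by the cluster; the function names are hypothetical, and the "workers" are simulated one after another in a single process. On a real cluster each block would be computed on its own node and the partial results exchanged over the network.

```python
def split_rows(n_rows, n_workers):
    """Return one (start, stop) row range per worker, as evenly sized as possible."""
    base, extra = divmod(n_rows, n_workers)
    ranges = []
    start = 0
    for worker in range(n_workers):
        # The first `extra` workers each take one additional row.
        stop = start + base + (1 if worker < extra else 0)
        ranges.append((start, stop))
        start = stop
    return ranges


def matvec_block(matrix, vector, start, stop):
    """Work for one hypothetical node: rows start..stop-1 of matrix times vector."""
    return [sum(a * x for a, x in zip(matrix[i], vector)) for i in range(start, stop)]


def parallel_matvec(matrix, vector, n_workers):
    """Compute matrix times vector block by block and concatenate the pieces."""
    result = []
    for start, stop in split_rows(len(matrix), n_workers):
        result.extend(matvec_block(matrix, vector, start, stop))
    return result


matrix = [[1, 2], [3, 4], [5, 6], [7, 8], [9, 10]]
vector = [1, -1]
print(split_rows(len(matrix), 2))
print(parallel_matvec(matrix, vector, 2))
```

Because each block needs only its own rows and the shared vector, the blocks can be computed independently. The cost of distributing the vector and collecting the results is why fast communication between nodes matters.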

The Max Planck Institute for Gravitational Physics

The Max Planck Institute for Gravitational Physics (Albert Einstein Institute) in Potsdam-Golm has established itself as a leading international research centre for gravitational physics since it was founded in 1995. Over 90 scientists and more than 150 visitors each year investigate all aspects of gravitational physics.

Cluster/technical data

A high-performance Linux compute cluster is being used. It consists of 64 computing nodes, each with 2 Intel XEON P4 processors clocked at 2.66 GHz, 2 GB RAM, and a local storage capacity of 120 GB per node.

Four so-called storage nodes record the large quantities of results data on an approximately 5 TB storage system. Three networks ensure communication between the individual computers. Each of these networks has its own specific task.

The core of the high-performance cluster is the network and thereby the corresponding switch that provides for inter-process communication. This switch was provided by the Force10Networks company. In this regard, special importance is placed on short latency periods and delay-free data transmission, both of which are guaranteed by GigaBit Ethernet technology.

Although Myrinet, a high-performance switching technology, often plays an important role today, the choice was made in favour of Gigabit Ethernet. It is more or less the standard, which promises favourable expansion possibilities in the future, and it was found to have the best price/performance ratio.

Because typical computation runs take several days or even weeks, the runs are administered by a batch system. The users communicate with the cluster through management nodes, where programs are compiled and results are visualised. The CACTUS Code (www.cactuscode.org) developed by AEI, a flexible collection of tools, makes it easy for all scientists involved to formulate problems in a computer-friendly way and to have calculations carried out.

A second expansion stage of the high-performance cluster is already being planned, so that at the end of 2003 the cluster will consist of 128 PC nodes with at least 12 TB of storage. A further expansion to 250 PC nodes is planned.

Details

Each compute node has three network cards for three specific networks.

The most important is the interconnect network, which links the compute nodes at 1,000 Mbit/s (1 Gbit/s) through a very powerful switch. A Force10Networks switch is used (for a description of the switch see below). It has a backplane (bus circuit board) capacity of 600 Gbit/s.

The second network, which is also very important, is used to transfer the results of individual nodes to the so-called storage nodes. Because of the enormous data output of the computer nodes, the best approach is to distribute the load over several nodes. For example, the output of 16 nodes is written to one storage node. The network uses an HP ProCurve 4108gl switch. It has a back plane of 36 Gbits. This is sufficient to deal with a load of 4 Mbytes from each [!] computer node at the same time.
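The load distribution described above can be checked with a short calculation. The following Python sketch is illustrative only. It assumes that the quoted 4 Mbytes is a per-second rate, that 1 Mbyte is 10^6 bytes, and that compute nodes are assigned to storage nodes in consecutive groups of 16. None of these details is stated in the text, and the function names are hypothetical.

```python
COMPUTE_NODES = 64            # from the technical data
STORAGE_NODES = 4             # from the technical data
BACKPLANE_GBIT_S = 36         # HP ProCurve 4108gl backplane, as quoted
OUTPUT_MBYTE_S_PER_NODE = 4   # assumption: the quoted 4 Mbytes is per second


def storage_node_for(compute_node):
    """Map a compute node index (0..63) to a storage node index (0..3).

    Assumes consecutive groups of 16 compute nodes share one storage node.
    """
    nodes_per_storage = COMPUTE_NODES // STORAGE_NODES
    return compute_node // nodes_per_storage


def aggregate_load_gbit_s(nodes, mbyte_s_per_node):
    """Total traffic in Gbit/s if all nodes write at the same time."""
    return nodes * mbyte_s_per_node * 8 / 1000


load = aggregate_load_gbit_s(COMPUTE_NODES, OUTPUT_MBYTE_S_PER_NODE)
print(f"compute nodes per storage node: {COMPUTE_NODES // STORAGE_NODES}")
print(f"aggregate load: {load:.3f} Gbit/s of {BACKPLANE_GBIT_S} Gbit/s backplane")
```

Under these assumptions the combined output of all 64 nodes stays well below the backplane capacity, consistent with the statement that the switch is sufficient.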

The third network ensures that all components of the cluster can be operated. For this purpose a switch from the HP company has been used. In order to keep the cable length as short as possible, two further switches are used in this network. The manufacturer of these is the 3Com company.

Cooling of the cluster

To keep the space required for the equipment small, SlashTwo housings were chosen. This dense packaging requires special consideration of the air flow in the housings, since the processors give off an enormous amount of heat that has to be carried away as quickly as possible.

The temperature of the ambient air should not exceed 20°C.

It is necessary to ensure that an air volume of 4 x 1400 m³ is available and can be recirculated.

The existing air conditioning system can handle these values and has an output of approx. 50 kW. The existing ceiling units are available as a reserve for especially sunny days in the summer months and provide an additional 24 kW.

Power supply

The cluster is supplied by 6 x 25 A lines. A UPS (uninterruptible power supply) ensures a steady power supply to the storage and head nodes for a period of 20 minutes.

Special software then ensures that these computers automatically shut down and are turned off.

Further specifications

Cluster

Per rack (19" cabinet):

Weight: 400 kg with 16 SlashTwo housings

240 kg for the network cabinet

250 kg for the rack with the storage nodes and head nodes, including the 6 TB storage units

Network

Force10Networks E600

Weight: 110 kg

Power consumption: 2800 W

Waste heat: 1400 W - 3500 W

Network specifications

Back plane capacity: 600 Gbits

Special software

Although the cluster can be regarded as one unit, its individual components must nonetheless remain individually accessible through software. Special software, so-called management software, simplifies this enormously. For this purpose the Megware company has developed cluster management software by the name of Clustware.

Special features of the cluster

Peak performance of the Cluster

Theoretically: 128 x 2 x 2.66 GHz ≈ 680 Gflops (128 CPUs, 2 floating point units per CPU, 2.66 GHz per unit)

The true values will be reflected in the benchmarks.

A single PC of the kind used in the cluster (2 CPUs), which is also available as a desktop workstation for individual scientists at the AEI, has a performance level of about 10 Gflops.
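The peak figures above follow from a simple product of CPU count, floating point units per CPU, and clock rate. The short Python sketch below reproduces that arithmetic using only the numbers quoted in the text. The function name is hypothetical, and the result is a theoretical upper bound, not a measured benchmark.

```python
def peak_gflops(cpus, flops_per_cycle, clock_ghz):
    """Theoretical peak in Gflops, assuming every floating point unit
    delivers one result per clock cycle."""
    return cpus * flops_per_cycle * clock_ghz


NODES = 64            # computing nodes in the cluster
CPUS_PER_NODE = 2     # processors per node
FPUS_PER_CPU = 2      # floating point units per CPU, as quoted
CLOCK_GHZ = 2.66      # clock rate per unit

cluster_peak = peak_gflops(NODES * CPUS_PER_NODE, FPUS_PER_CPU, CLOCK_GHZ)
node_peak = peak_gflops(CPUS_PER_NODE, FPUS_PER_CPU, CLOCK_GHZ)

print(f"cluster: {cluster_peak:.2f} Gflops")    # 680.96
print(f"single node: {node_peak:.2f} Gflops")   # 10.64
```

The 128 CPUs in the formula correspond to the 64 dual-processor nodes of the current configuration.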

The communication network is based on Gigabit Ethernet. This technology was chosen on the assumption that the development of Ethernet will continue. The inter-process switch is already designed for 10 Gigabit Ethernet.

Comparison with other computers

The Institute also has an ORIGIN 2000 with a virtual reality graphic engine at its disposal. It was acquired in 1997 as a high-performance parallel computer, but can no longer keep up with today’s requirements.

The ORIGIN is equipped with 32 CPUs and has 8 GB of memory. Its performance is in the range of 20 to 40 Gflops, which was excellent 3 years ago. Today the systems are no longer comparable, because a single PC of the kind used in the cluster (2 CPUs) already performs at a level of 10 Gflops. An actual comparison with other clusters and other high-performance systems will therefore only be made in the course of the coming weeks and months.

For reference, here are the data for another cluster, at the High Performance Computing Center North (HPC2N):

240 CPUs in 120 dual nodes

2 AMD Athlon MP2000+ processors

1 Tyan Tiger MPX motherboard

1-4 GB memory

1 Western Digital 20GB hard disk

1 Fast Ethernet NIC

1 Wulfkit3 SCI

A peak performance of 800 Gflops is given for this cluster.
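The quoted peak figures can be put side by side with a few lines of Python. This is only a rough sketch using the theoretical numbers given in the text. The variable and function names are hypothetical, and theoretical peaks say little about how real applications perform.

```python
PEYOTE_PEAK_GFLOPS = 680.0            # theoretical peak quoted for the cluster
PEYOTE_CPUS = 128
ORIGIN_GFLOPS_RANGE = (20.0, 40.0)    # range quoted for the ORIGIN 2000
HPC2N_PEAK_GFLOPS = 800.0             # peak quoted for the HPC2N cluster
HPC2N_CPUS = 240


def speedup_range(new_peak, old_low, old_high):
    """Return (smallest, largest) ratio of new_peak to an old system
    whose performance lies between old_low and old_high."""
    return new_peak / old_high, new_peak / old_low


def per_cpu(peak, cpus):
    """Average theoretical peak per CPU."""
    return peak / cpus


low, high = speedup_range(PEYOTE_PEAK_GFLOPS, *ORIGIN_GFLOPS_RANGE)
print(f"PEYOTE vs ORIGIN: {low:.0f}x to {high:.0f}x")
print(f"PEYOTE per CPU: {per_cpu(PEYOTE_PEAK_GFLOPS, PEYOTE_CPUS):.2f} Gflops")
print(f"HPC2N per CPU: {per_cpu(HPC2N_PEAK_GFLOPS, HPC2N_CPUS):.2f} Gflops")
```

The resulting range of 17 to 34 times the ORIGIN is consistent with the figure of approximately 20 times quoted earlier.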